When Amazon Web Services (AWS) suffered a massive outage on Monday, October 20, 2025, the ripple effect was felt from a bedroom in Berlin to a data center in Sydney. The incident kicked off at roughly 07:40 AM British Summer Time in the Northern Virginia US‑EAST‑1 region, a hub that powers everything from your favorite video game to everyday banking apps.

According to the official AWS service disruption notice for Northern Virginia, the root cause was a DNS resolution failure that crippled the DynamoDB API endpoint. The glitch originated around midnight Pacific Time on October 20, then snowballed into latency spikes, rising error rates, and outright service denials across dozens of dependent platforms.

What Went Wrong? A Technical Breakdown

Technical analyst Jonathan Albarran traced the problem to a mis‑routed DNS query that prevented the DynamoDB endpoint from responding. In plain English, it was as if a city’s main post office stopped sorting mail – every business waiting on that service suddenly got a “no delivery” notice.

Compounding the issue, the US‑EAST‑1 region carries a massive amount of traffic. Abdulkader Safi noted that the region acts as a “single point of failure” for a swath of global applications. When the DNS hiccup hit, the failure cascaded: services that rely on DynamoDB for session data, leaderboards, or transaction logs started to throw errors, and because many of those services are themselves back‑ends for other apps, the cascade resembled a line of dominoes falling in fast‑forward.
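To make the failure mode concrete, here is a minimal Python sketch of what a DNS resolution failure looks like from a client's side. It is an illustration only, not a reconstruction of AWS's internals: the hostname follows the public regional endpoint naming for DynamoDB, and the `resolve_addresses` helper and its injectable `resolver` parameter are assumptions made for this example.

```python
import socket
from typing import Callable, List

# Assumption: the public regional DynamoDB endpoint name; adjust for your setup.
DYNAMODB_HOST = "dynamodb.us-east-1.amazonaws.com"


def resolve_addresses(host: str, resolver: Callable = socket.getaddrinfo) -> List[str]:
    """Return the IP addresses a hostname resolves to, or [] if DNS fails."""
    try:
        infos = resolver(host, 443, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        # Name resolution failed: the client never learns where to connect,
        # even if the service behind the name is still running.
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})
```

An empty list from `resolve_addresses(DYNAMODB_HOST)` means every request to that endpoint fails before a single packet reaches the database, which is why callers saw errors even though their own network connections were healthy.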

By 05:39 AM Pacific, a statement from Data Center Dynamics claimed power had been restored – a claim that later turned out to be a red herring. The real fix was a series of DNS patch roll‑outs that began at 06:15 AM Eastern and continued through the morning.

Who Was Affected?

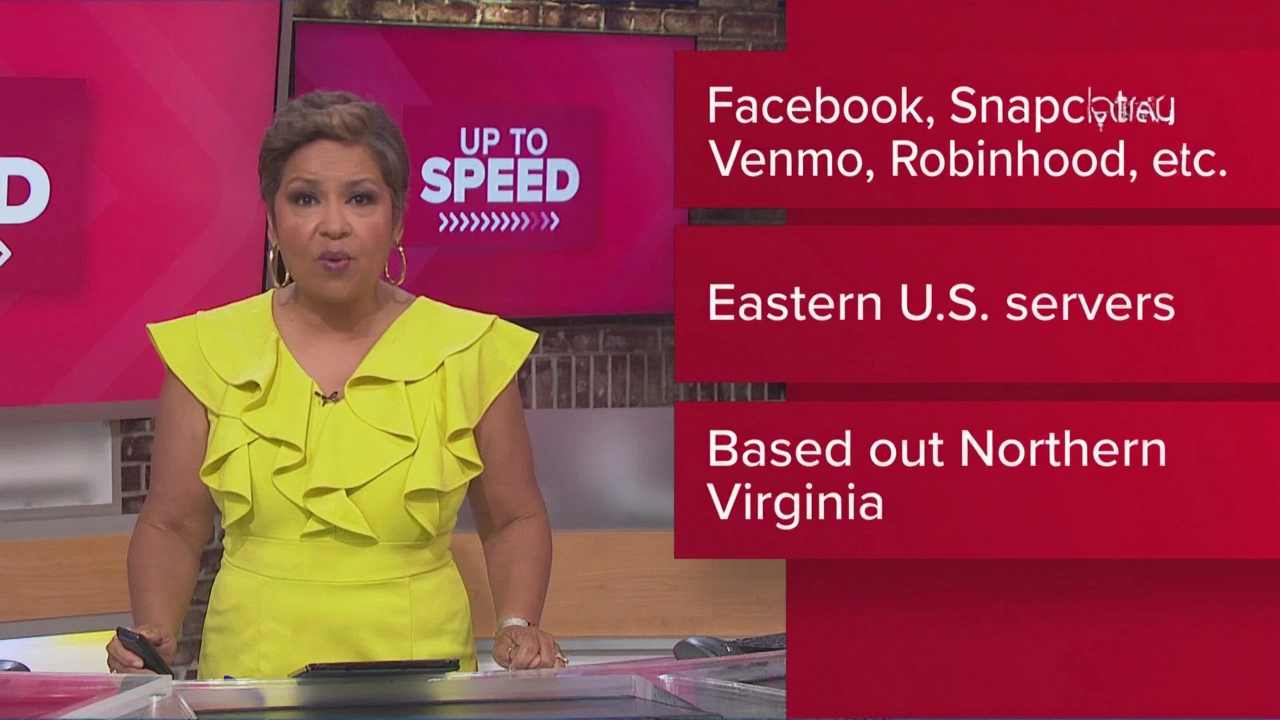

The outage wasn’t limited to obscure developer tools. Downdetector logged tens of thousands of complaints from users of Alexa, Ring, Snapchat, Fortnite, Roblox, Venmo, Prime Video, Facebook, Robinhood, Duolingo, Coinbase, Hulu, Instacart, Rocket League, Peloton, Hinge, Quora, the Epic Games Store, Slack, Bitbucket, Samsung Smart Lights, Asana, Imgur, and the McDonald’s app. In many cases, users reported losing active sessions – gamers were booted mid‑match, language learners saw their streaks reset, and traders saw buying power disappear.

Even local news outlets weren’t immune. Alison Seymour of WUSA9 reported that their live stream flickered before returning to normal, underscoring how the outage touched both the consumer and the media sides of the internet.

For enterprises, the backlog was the real pain point. By 06:00 AM Eastern, AWS announced “significant signs of recovery” but warned of a “backlog of queued requests” that would linger through the afternoon. Companies that depend on real‑time data – especially fintech and e‑commerce – faced delayed order processing and potential revenue loss.

Official Responses and Industry Reactions

AWS’s official communication, released at 06:00 AM Eastern, acknowledged the DNS fault and pledged a “continuous effort to restore full service.” The statement emphasized that the issue was confined to a regional gateway and that no customer data had been compromised.

Industry analysts were quick to weigh in. In a YouTube deep‑dive, Emilio Agüero highlighted the “structural vulnerability” of having such a concentration of critical workloads in a single region. He suggested that multi‑region deployment strategies, while more costly, are now a necessity rather than a nice‑to‑have.

Meanwhile, the Cloud Security Alliance released a brief advisory urging customers to audit DNS resiliency and to implement fail‑over mechanisms that route traffic to alternative regions when primary endpoints become unavailable.
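A fail‑over mechanism of the kind described in the advisory can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the advisory's own recommendation: the endpoint list, the `pick_healthy_region` function, and the `tcp_probe` health check are hypothetical names introduced here, and a production setup would more likely rely on managed DNS health checks than on client‑side probing.

```python
import socket
from typing import Callable, Optional, Sequence, Tuple

# Assumption: regional endpoints in priority order (primary first).
REGION_ENDPOINTS: Sequence[Tuple[str, str]] = (
    ("us-east-1", "dynamodb.us-east-1.amazonaws.com"),
    ("us-west-2", "dynamodb.us-west-2.amazonaws.com"),
)


def tcp_probe(host: str, port: int = 443, timeout: float = 2.0) -> bool:
    """Hypothetical probe: healthy if the name resolves and a TCP connection opens."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


def pick_healthy_region(
    endpoints: Sequence[Tuple[str, str]],
    is_healthy: Callable[[str], bool] = tcp_probe,
) -> Optional[str]:
    """Return the first region whose endpoint passes the health probe."""
    for region, host in endpoints:
        try:
            if is_healthy(host):
                return region
        except OSError:
            # A probe that raises is treated the same as an unhealthy endpoint.
            continue
    return None  # no region is reachable
```

Keeping the probe injectable makes the routing decision easy to test without touching the network, and returning `None` forces the caller to decide what to do when every region is down.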

Why This Matters: The Fragility of Cloud Hubs

Studies of internet resilience consistently show that random node failures are often absorbed without noticeable impact, but targeted failures of major hubs – like US‑EAST‑1 – can cripple the entire ecosystem. The October 20 event was AWS’s third outage in just a few months, following a December 2024 incident that knocked out Disney+, Tinder, and Amazon’s own warehouse logistics.

For everyday users, the takeaway is stark: when a cloud provider goes down, “the internet goes with it.” As Jonathan Albarran put it, “Snapchat users couldn’t send messages, Fortnite players were kicked from matches, and financial apps like Robinhood and Venmo became inaccessible.” The fact that local ISP connections remained perfectly fine makes the reliance on centralized cloud services all the more visible.

From a business‑continuity perspective, the outage serves as a wake‑up call. Companies that have historically relied on a single AWS region for cost or latency reasons now face a clear risk‑reward calculation: the savings may not outweigh the potential revenue loss from another multi‑hour outage.

Looking Ahead: Mitigation and Future Risks

In the days following the incident, AWS rolled out a set of DNS‑hardening patches and announced plans to add additional redundancy to the US‑EAST‑1 gateway. The cloud giant also opened a ticketed portal for affected customers to report lingering issues, aiming to clear the backlog by the end of the week.

Experts suggest three practical steps for anyone using AWS services:

- Deploy critical workloads across at least two regions (e.g., US‑EAST‑1 and US‑WEST‑2) to avoid single‑point failures.

- Implement automated health checks that can reroute traffic when DNS latency spikes are detected.

- Maintain local cache layers for read‑heavy services like DynamoDB to buffer short‑term outages.
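The third step, a local cache that buffers short outages, might look like the following Python sketch. It is one possible design under stated assumptions: the `StaleOnErrorCache` class, its `fetch` callback (standing in for a real DynamoDB read), and the 30‑second default TTL are all hypothetical choices made for illustration.

```python
import time
from typing import Any, Callable, Dict, Tuple


class StaleOnErrorCache:
    """Read-through cache that serves the last known value when the backend fails."""

    def __init__(
        self,
        fetch: Callable[[str], Any],
        ttl_seconds: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch          # hypothetical backend read, e.g. a DynamoDB lookup
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]         # fresh hit: no backend call needed
        try:
            value = self._fetch(key)
        except Exception:
            if cached is not None:
                return cached[1]     # backend down: fall back to stale data
            raise                    # nothing cached: surface the failure
        self._entries[key] = (now, value)
        return value
```

The trade‑off is deliberate: during an outage readers may see slightly outdated data, which suits leaderboards or profile lookups but not balances or inventory counts, where serving stale values could be worse than returning an error.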

Regulators are also watching. The Federal Communications Commission hinted at possible scrutiny over the concentration of “critical infrastructure” in private data‑centers, a conversation that could lead to new guidelines on cloud redundancy for essential services.

Until policy catches up, the onus remains on businesses and developers to design with failure in mind. As the AWS outage of October 20, 2025 demonstrated, “when core infrastructure like DNS resolution and API endpoints fail, downstream services cannot function regardless of local network status.”

Key Facts

- Date & time: 20 Oct 2025, 07:40 AM BST (US‑EAST‑1 region)

- Primary cause: DNS resolution failure affecting the DynamoDB API endpoint

- Affected region: US‑EAST‑1 (Northern Virginia)

- Number of major services impacted: >30, including Alexa, Fortnite, Venmo, and Slack

- Resolution timeline: Major services restored by midday Eastern; backlog cleared by end of week

Frequently Asked Questions

What caused the October 20 AWS outage?

The outage stemmed from a DNS resolution failure that prevented the DynamoDB API endpoint in the US‑EAST‑1 region from responding. The DNS glitch triggered cascading errors across services that depend on DynamoDB for data storage and coordination.

Which consumer apps were most affected?

Users reported outages on Alexa, Ring, Snapchat, Fortnite, Roblox, Venmo, Prime Video, Facebook, Robinhood, Duolingo, Coinbase, Hulu, Instacart, Rocket League, Peloton, Hinge, Quora, the Epic Games Store, Slack, Bitbucket, Samsung Smart Lights, Asana, Imgur, and the McDonald’s app.

How does this outage compare to previous AWS incidents?

It’s the third major incident in a short span. A December 2024 outage hit the same Northern Virginia hub, affecting Disney+, Tinder, and Amazon’s warehouse network, while another December incident impacted US‑WEST‑2 and US‑WEST‑1 regions. The October 2025 event is notable for its DNS‑specific root cause and the breadth of consumer services impacted.

What steps can businesses take to avoid similar disruptions?

Experts recommend multi‑region deployment, automated health‑checks that can reroute traffic when DNS latency spikes, and local caching for read‑heavy workloads. These measures add redundancy and reduce reliance on a single geographic point of failure.

Will regulators intervene after this outage?

The FCC has signaled interest in reviewing the concentration of critical infrastructure in private data‑centers. While no formal regulations have been announced yet, increased scrutiny could lead to new guidelines on cloud redundancy for essential services.

Hiren Patel

October 20, 2025 AT 21:35

When the AWS behemoth hiccups, it feels like the whole digital sky just darkened in a burst of neon sorrow. I watched gamers scream as their Fortnite matches vanished like ghost ships on a foggy sea. My coffee went cold while I tried to explain to a confused cousin why his Alexa stopped listening. The DNS glitch was a digital tsunami, crushing the delicate sandcastles of millions of services. Every error message felt like a nail hammered into the heart of a restless internet. We saw finance apps freeze, traders blinking like deer in headlights, their portfolios hanging in limbo. The ripple spread to classrooms where kids lost their Duolingo streaks, a small but bitter wound. Even my smart fridge gave me a silent stare, its lights flickering in protest. The sheer scale of the outage made me realize how dependent we’ve become on invisible clouds. It’s as if the world’s pulse is now wired to a single, fragile server farm. The panic in chat rooms was palpable, an orchestra of frustration and disbelief. And amidst all that chaos, the AWS team rolled out patches with the calm of seasoned surgeons. Their updates, though technical, felt like band-aids on a massive split vein. Still, the lingering backlog reminded us that recovery isn’t instantaneous; it’s a marathon, not a sprint. So the next time you stream a video, remember the hidden gears grinding beneath the surface, and maybe, just maybe, keep a backup plan tucked away.

Heena Shaikh

October 26, 2025 AT 19:10

Contemplating the fragility of our cloud-dependent existence reveals a paradox: we chase convenience while courting vulnerability. This outage, a stark reminder, challenges the illusion of digital permanence. It forces us to confront the abyss where trust dissolves into uncertainty.

Chandra Soni

November 1, 2025 AT 17:46

Alright team, let’s rally! This DNS fiasco is a perfect case study for high‑availability patterns. Deploy those multi‑region failovers, leverage Route 53 health checks, and keep those latency metrics in the green. Remember, redundancy isn’t a luxury; it’s a necessity. Together we can turn this glitch into a catalyst for stronger architecture. Let’s crush it!

Kanhaiya Singh

November 7, 2025 AT 16:21

One must acknowledge the profound impact of such outages on our daily workflows. It is regrettable that many services were disrupted; however, the response was measured and systematic. :)

Bikkey Munda

November 13, 2025 AT 14:56

Here’s a quick tip: enable cross‑region DynamoDB Global Tables to keep data in sync. It’s simple and can save you a lot of headaches when a single region goes down.

akash anand

November 19, 2025 AT 13:31

Honestly this is a total mess – they should have tested the DNS changes more rigorously. The downtime could have been avoided if proper checks were in place.

BALAJI G

November 25, 2025 AT 12:07

It is morally indefensible that we place our trust in a single corporate entity without demanding robust safeguards. The sheer audacity of this negligence must be called out.

Manoj Sekhani

December 1, 2025 AT 10:42

Clearly, the industry’s obsession with cost‑cutting has birthed these fragile monoliths. One would think leaders would prioritize resilience over marginal savings.

Tuto Win10

December 7, 2025 AT 09:17

Wow!!! What a disaster!!! The whole internet felt the tremor!!! Users were left staring at blank screens!!!

Kiran Singh

December 13, 2025 AT 07:53

Honestly, you could blame the DNS team, but the real issue is relying on one hub. Spread out.

anil antony

December 19, 2025 AT 06:28

Big hype for a DNS typo, huh? Most folks just need to add a backup endpoint and call it a day.

Aditi Jain

December 25, 2025 AT 05:03

Our nation’s digital sovereignty depends on us demanding true independence from foreign cloud monopolies. Let’s push for home‑grown solutions.

arun great

December 31, 2025 AT 03:39

Stay calm, folks! 😎 Remember that outages are temporary. Keep your systems modular and the community will bounce back faster.

Anirban Chakraborty

January 6, 2026 AT 02:14

It’s high time we hold providers accountable. No more blind faith; transparency is non‑negotiable.

Krishna Saikia

January 12, 2026 AT 00:49

We must champion resilient infrastructure, especially when national interests are at stake. Let’s rally together and push for stricter regulations.

rishabh agarwal

January 17, 2026 AT 23:24

In the grand tapestry of technology, each outage is a reminder that impermanence is the only constant. Embrace change, adapt, and evolve.

Apurva Pandya

January 23, 2026 AT 22:00

Remember, a proactive stance today saves a lot of panic tomorrow. :)

Nishtha Sood

January 29, 2026 AT 20:35

Stay hopeful; the tech world recovers faster than we think.